Portfolio Optimization


Optimal: Choosing optimal allocates your portfolio based on maximizing the Sharpe Ratio. Two methods are used:

- Monte Carlo (random-weight) simulation, in which n thousand portfolios are generated and the one with the maximum Sharpe Ratio is selected.

- Minimizing the negative Sharpe Ratio (equivalent to maximizing the Sharpe Ratio) using Sequential Least Squares Programming (SLSQP) via scipy.optimize.minimize.

- Returns the best allocation of weights to optimize your portfolio.

- Enter at least one stock.

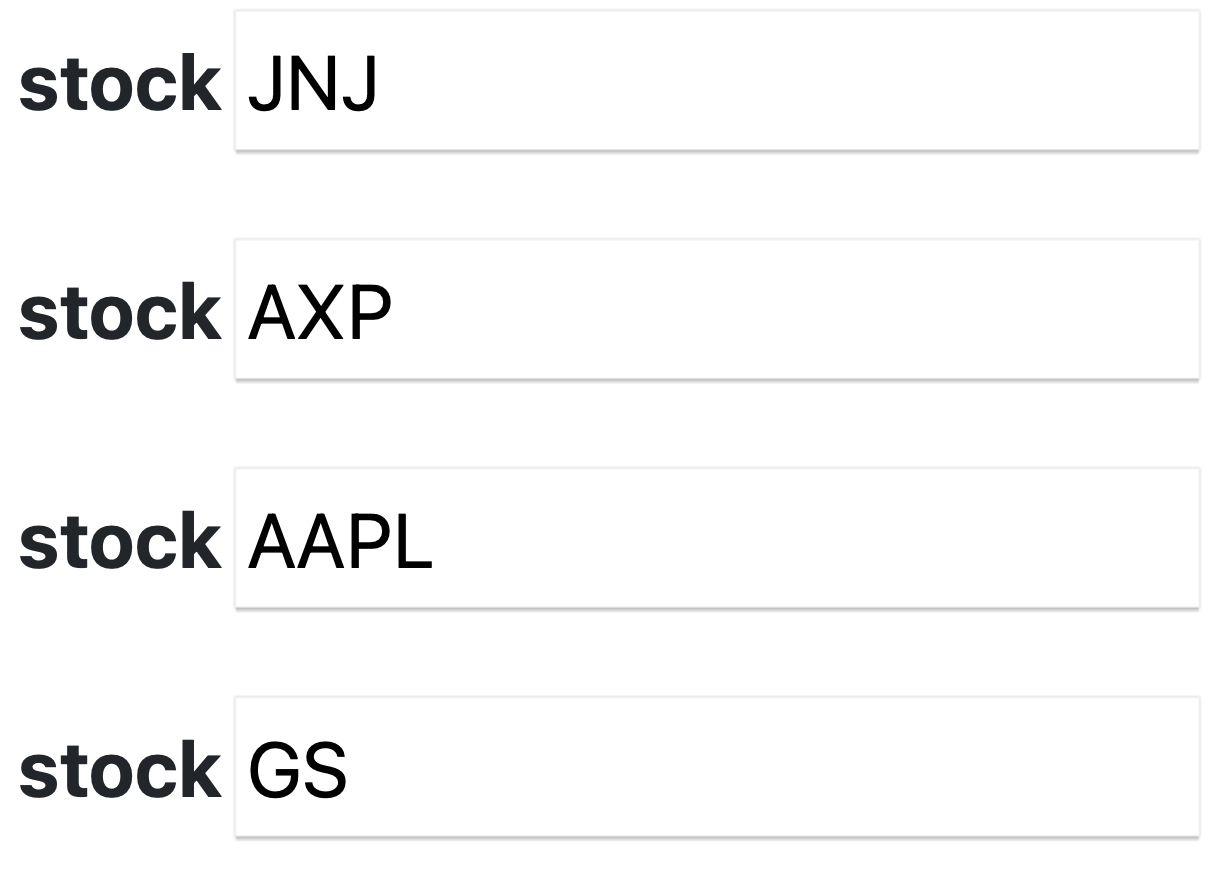
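The two optimization methods described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the tool's actual implementation: the function names, the zero risk-free rate, the long-only bounds (each weight between 0 and 1), and the constraint that weights sum to 1 are all assumptions, and the mean returns and covariance matrix are assumed to have been estimated elsewhere.

```python
import numpy as np
from scipy.optimize import minimize


def sharpe_ratio(weights, mean_returns, cov_matrix, risk_free_rate=0.0):
    """Sharpe Ratio of a portfolio: excess return divided by volatility."""
    port_return = weights @ mean_returns
    port_vol = np.sqrt(weights @ cov_matrix @ weights)
    return (port_return - risk_free_rate) / port_vol


def monte_carlo_max_sharpe(mean_returns, cov_matrix, n_portfolios=10_000,
                           risk_free_rate=0.0, seed=0):
    """Draw random long-only weights and keep the best Sharpe Ratio found."""
    rng = np.random.default_rng(seed)
    weights = rng.random((n_portfolios, len(mean_returns)))
    weights /= weights.sum(axis=1, keepdims=True)  # each row sums to 1
    returns = weights @ mean_returns
    # Row-wise quadratic form w^T * Sigma * w for every simulated portfolio.
    vols = np.sqrt(np.einsum("ij,jk,ik->i", weights, cov_matrix, weights))
    sharpes = (returns - risk_free_rate) / vols
    best = np.argmax(sharpes)
    return weights[best], sharpes[best]


def slsqp_max_sharpe(mean_returns, cov_matrix, risk_free_rate=0.0):
    """Maximize the Sharpe Ratio by minimizing its negative with SLSQP."""
    n_assets = len(mean_returns)
    start = np.full(n_assets, 1.0 / n_assets)  # equal weights as a start
    result = minimize(
        lambda w: -sharpe_ratio(w, mean_returns, cov_matrix, risk_free_rate),
        start,
        method="SLSQP",
        bounds=[(0.0, 1.0)] * n_assets,
        constraints=[{"type": "eq", "fun": lambda w: np.sum(w) - 1.0}],
    )
    return result.x, -result.fun
```

Both functions return a pair: the weight vector and the Sharpe Ratio it achieves. The random search can only approach the optimum, so the SLSQP result is typically at least as good on the same inputs.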

- Enter one stock ticker per row in all caps. For example, instead of entering Tesla, enter its ticker 'TSLA'.

- Be sure each stock you enter is currently listed on an exchange. All entered values are cross-referenced with this general list of stocks provided by Nasdaq:

- If you enter a stock that is not listed on an exchange, you will be directed to return to the home page and re-enter your values.

- See the below image as an example:

Source: Introduction to Probability 2nd edition (Bertsekas, Tsitsiklis)

Probability

Random Variables and Probability Mass Functions (PMFs)

Given an experiment and the corresponding set of possible outcomes (the sample space \(\Omega \)), a random variable associates a particular number with each outcome of the experiment. This number is known as the value of the random variable. Mathematically, a random variable is a real-valued function of the experimental outcome. A random variable is discrete if the set of values it can take on is either finite or countably infinite.

Each random variable can be associated with certain "averages" of interest (mean and variance for example).

A random variable can be characterized through the probabilities of the values that it can take on. For a discrete random variable \(X\), these are captured by the probability mass function (PMF) of \(X\). If \(x\) is any possible value of \(X\), the probability mass of \(x\), denoted \(p_x(x)\), is the probability of the event \(\{X=x\}\) consisting of all the outcomes that give rise to a value of \(X\) equal to \(x\): \(p_x(x) = P(\{X=x\})\). Note that \[\sum_{x} p_x(x) = 1.\]
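As a small illustration of the definition above, the sketch below builds a PMF directly from a sample space. The experiment (two fair coin tosses) and the random variable (number of heads) are assumptions chosen for the example; exact fractions are used so the probabilities sum to exactly 1.

```python
from fractions import Fraction
from itertools import product

# Sample space: every outcome of two fair coin tosses, all equally likely.
sample_space = list(product("HT", repeat=2))
prob_outcome = Fraction(1, len(sample_space))


def X(outcome):
    """Random variable: the number of heads in the outcome."""
    return outcome.count("H")


# p_x(x) = P({X = x}): add up the probabilities of all outcomes mapped to x.
pmf = {}
for outcome in sample_space:
    x = X(outcome)
    pmf[x] = pmf.get(x, Fraction(0)) + prob_outcome

total = sum(pmf.values())  # the probability masses sum to 1
```

Here the PMF assigns probability 1/4 to zero heads, 1/2 to one head, and 1/4 to two heads.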

Expectation, Mean & Variance (PMFs)

Definition: The expected value (expectation, mean) of a random variable \(X\), with PMF \(p_x\) is: \[E[X] = \sum_{x} xp_x(x)\]

The expectation of a random variable is the weighted average of the possible values \(X\) can take on, where each value is weighted by its probability.
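The expectation formula translates almost directly into code. The sketch below assumes a PMF stored as a dictionary from values to probabilities; the helper name `expectation` and the two example PMFs are illustrative choices.

```python
from fractions import Fraction


def expectation(pmf):
    """E[X]: sum of x * p_x(x) over every value x with positive mass."""
    return sum(x * p for x, p in pmf.items())


# Number of heads in two fair coin tosses.
heads = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}
# One roll of a fair six-sided die.
die = {face: Fraction(1, 6) for face in range(1, 7)}

mean_heads = expectation(heads)  # 0*(1/4) + 1*(1/2) + 2*(1/4)
mean_die = expectation(die)      # (1 + 2 + ... + 6) / 6
```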

Definition: The 2nd moment of the random variable \(X\) is the expected value of the random variable \(X^2\). More generally, the \(n\)th moment of a random variable is the expected value of the random variable \(X^n\), denoted \(E[X^n]\). The first moment of the random variable is the mean.

Definition: The variance of a random variable is the expected value of the random variable \((X - E[X])^2\). The variance provides a measure of the dispersion of \(X\) around its mean \(E[X]\). Because \((X - E[X])^2\) is never negative, the variance is always nonnegative. \[var(X) = E[(X - E[X])^2] = \sum_{x} (x-E[X])^2 p_x(x)\]

In terms of moments, the variance can be expressed as: \[ var(X) = E[X^2] - (E[X])^2 \]

Definition: The standard deviation, denoted \( \sigma_x\), is the square root of the variance: \[\sigma_x = \sqrt{var(X)}\]
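The moment, variance, and standard deviation definitions above can be checked numerically. The sketch below assumes a fair six-sided die as the example PMF; the helper names are illustrative.

```python
import math
from fractions import Fraction


def moment(pmf, n):
    """n-th moment E[X^n] of a discrete random variable."""
    return sum(x ** n * p for x, p in pmf.items())


def variance(pmf):
    """var(X) computed from the definition E[(X - E[X])^2]."""
    mean = moment(pmf, 1)
    return sum((x - mean) ** 2 * p for x, p in pmf.items())


die = {face: Fraction(1, 6) for face in range(1, 7)}

var_from_definition = variance(die)
var_from_moments = moment(die, 2) - moment(die, 1) ** 2
std_dev = math.sqrt(var_from_definition)
```

Both routes give a variance of 35/12 for the die, so the standard deviation is roughly 1.71.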

Mean and Variance of a Linear Function of a Random Variable:

Let \(X\) be a random variable and let \(Y = aX + b \), where \(a\) and \(b\) are scalars. Then: \(E[Y] = aE[X] + b \) and \(var(Y) = a^2var(X)\)
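A quick numerical check of the linear-function rule, assuming a fair die for \(X\) and the arbitrary scalars \(a = 3\), \(b = -2\); the helper names are illustrative.

```python
from fractions import Fraction


def expectation(pmf):
    return sum(x * p for x, p in pmf.items())


def variance(pmf):
    mean = expectation(pmf)
    return sum((x - mean) ** 2 * p for x, p in pmf.items())


def linear_transform(pmf, a, b):
    """PMF of Y = a*X + b, given the PMF of X."""
    pmf_y = {}
    for x, p in pmf.items():
        y = a * x + b
        # Accumulate in case several x values map to the same y (e.g. a = 0).
        pmf_y[y] = pmf_y.get(y, Fraction(0)) + p
    return pmf_y


die = {face: Fraction(1, 6) for face in range(1, 7)}
a, b = 3, -2
pmf_y = linear_transform(die, a, b)

mean_matches = expectation(pmf_y) == a * expectation(die) + b
variance_matches = variance(pmf_y) == a ** 2 * variance(die)
```

Note that the shift \(b\) moves the mean but leaves the variance unchanged.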

Variance of the Sum of Independent Random Variables:

If \(X\) and \(Y\) are independent, then \(var(X+Y) = var(X) + var(Y)\) and generally: \[var(X_1+ X_2 + ... + X_n) = var(X_1) + var(X_2) + ... + var(X_n) \]
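The additivity of variance for independent random variables can be verified by enumeration. The sketch below assumes two independent rolls of a fair die, so the joint probability of each pair is the product of the individual probabilities.

```python
from fractions import Fraction
from itertools import product


def variance(pmf):
    mean = sum(x * p for x, p in pmf.items())
    return sum((x - mean) ** 2 * p for x, p in pmf.items())


die = {face: Fraction(1, 6) for face in range(1, 7)}

# Independence: P(X = x, Y = y) = p_x(x) * p_y(y) for every pair (x, y).
pmf_sum = {}
for (x, px), (y, py) in product(die.items(), repeat=2):
    pmf_sum[x + y] = pmf_sum.get(x + y, Fraction(0)) + px * py

var_of_sum = variance(pmf_sum)
sum_of_vars = variance(die) + variance(die)
```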

Covariance and Correlation

The covariance of two random variables \(X\) and \(Y\), denoted \(cov(X,Y)\), is: \[cov(X,Y) = E[(X-E[X]) (Y-E[Y])] = E[XY] - E[X]E[Y] \]

When \(cov(X,Y) = 0\), \(X\) and \(Y\) are uncorrelated. Properties of the covariance are listed below:

- \(cov(X,X) = var(X)\)

- \(cov(X,aY+b) = a \cdot cov(X,Y)\)

- \(cov(X,Y+Z) = cov(X,Y) + cov(X,Z) \)

- If \(X\) and \(Y\) are independent then \(cov(X,Y) = 0\) and they are uncorrelated
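The covariance definition and its properties can be checked on a small sample space. The sketch below assumes three fair coin tosses (1 for heads, 0 for tails) and defines random variables as plain functions of the outcome; all names are illustrative.

```python
from fractions import Fraction
from itertools import product

# Sample space: three fair coin tosses, all outcomes equally likely.
outcomes = list(product((0, 1), repeat=3))
p = Fraction(1, len(outcomes))


def E(f):
    """Expected value of the random variable f over the sample space."""
    return sum(f(w) * p for w in outcomes)


def cov(f, g):
    """cov(f, g) = E[fg] - E[f]E[g]."""
    return E(lambda w: f(w) * g(w)) - E(f) * E(g)


def X(w): return w[0]          # first toss
def Y(w): return w[0] + w[1]   # heads in tosses 1 and 2 (depends on X)
def Z(w): return w[1] + w[2]   # heads in tosses 2 and 3
def W(w): return w[2]          # third toss (independent of X)


mean_x, mean_y = E(X), E(Y)
# The two forms of the definition agree.
cov_centered = E(lambda w: (X(w) - mean_x) * (Y(w) - mean_y))
# cov(X, X) is the variance of X.
var_x = E(lambda w: (X(w) - mean_x) ** 2)
# Scaling and shifting: cov(X, aY + b) = a * cov(X, Y).
cov_scaled = cov(X, lambda w: 5 * Y(w) + 7)
# Additivity in the second argument: cov(X, Y + Z) = cov(X, Y) + cov(X, Z).
cov_added = cov(X, lambda w: Y(w) + Z(w))
```

In this example `cov(X, W)` is 0 because the first and third tosses are independent, while `cov(X, Y)` is 1/4 because `Y` includes the first toss.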

Definition: The correlation coefficient, denoted \(\rho(X,Y)\), of two random variables \(X\) and \(Y\) that have nonzero variances is: \[ \rho(X,Y) = \frac{cov(X,Y)}{\sqrt{var(X)var(Y)}}\]

\(\rho\) varies from -1 to 1 and can be viewed as the normalized version of the covariance \(cov(X,Y)\). If \( \rho \gt 0\) then the values of the random variables \(X-E[X]\) and \(Y-E[Y]\) "tend" to have the same sign (if \(\rho \lt 0\) then the values \(X-E[X]\) and \(Y-E[Y]\) tend to have opposite signs).
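A short sketch of the correlation coefficient, assuming two fair coin tosses (1 for heads, 0 for tails) as the sample space. The random variables and helper names are illustrative.

```python
import math
from fractions import Fraction
from itertools import product

outcomes = list(product((0, 1), repeat=2))
p = Fraction(1, len(outcomes))


def E(f):
    return sum(f(w) * p for w in outcomes)


def cov(f, g):
    return E(lambda w: f(w) * g(w)) - E(f) * E(g)


def correlation(f, g):
    """rho(f, g) = cov(f, g) / sqrt(var(f) * var(g)); needs nonzero variances."""
    return float(cov(f, g)) / math.sqrt(float(cov(f, f) * cov(g, g)))


def X(w): return w[0]            # first toss
def Y(w): return w[0] + w[1]     # total heads, moves with X
def neg_Y(w): return -Y(w)       # moves against X


rho_positive = correlation(X, Y)
rho_negative = correlation(X, neg_Y)
```

Here `rho_positive` is about 0.707 and `rho_negative` is its mirror image, which matches the sign interpretation given above.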

Variance of the Sum of Random Variables

The covariance can be used to obtain a formula for the variance of the sum of several (not necessarily independent) random variables. If \(X_1, X_2, ... ,X_n\) are random variables with finite variance, then for two variables \(X_1, X_2\): \[var(X_1 + X_2) = var(X_1) + var(X_2) + 2cov(X_1, X_2) \] Generally this is expressed as: \[var(\sum_{i=1}^n X_i) = \sum_{i=1}^n var(X_i) + \sum_{(i,j)| i \neq j} cov(X_i,X_j) \]
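The general formula can be verified on dependent random variables. The sketch below assumes three fair coin tosses (1 for heads, 0 for tails) and three overlapping random variables; note that the covariance sum runs over ordered pairs, so each unordered pair is counted twice.

```python
from fractions import Fraction
from itertools import product

outcomes = list(product((0, 1), repeat=3))
p = Fraction(1, len(outcomes))


def E(f):
    return sum(f(w) * p for w in outcomes)


def cov(f, g):
    return E(lambda w: f(w) * g(w)) - E(f) * E(g)


def var(f):
    return cov(f, f)


def X1(w): return w[0]
def X2(w): return w[0] + w[1]   # shares the first toss with X1
def X3(w): return w[1] + w[2]   # shares the second toss with X2


variables = [X1, X2, X3]

# Left-hand side: variance of the sum, computed directly.
lhs = var(lambda w: sum(f(w) for f in variables))

# Right-hand side: individual variances plus covariances of all ordered
# pairs (i, j) with i != j.
rhs = sum(var(f) for f in variables) + sum(
    cov(f, g)
    for i, f in enumerate(variables)
    for j, g in enumerate(variables)
    if i != j
)

# Two-variable special case.
two_var_lhs = var(lambda w: X1(w) + X2(w))
two_var_rhs = var(X1) + var(X2) + 2 * cov(X1, X2)
```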